Taylor & Francis – Harnessing the Power of Knowledge

Discover our Research

Access millions of peer-reviewed journal articles

Publish your Research

Enhance your career and make an impact with your research

Explore our Books

Reference-led content in specialist subject areas spanning the Humanities, Social Sciences, and STEM.

Content and Research Platforms

Taylor & Francis offers a range of content platforms to connect readers to knowledge. They are built around customer needs, with the aim of facilitating discovery and allowing users to access relevant research and information quickly and easily, wherever they are.

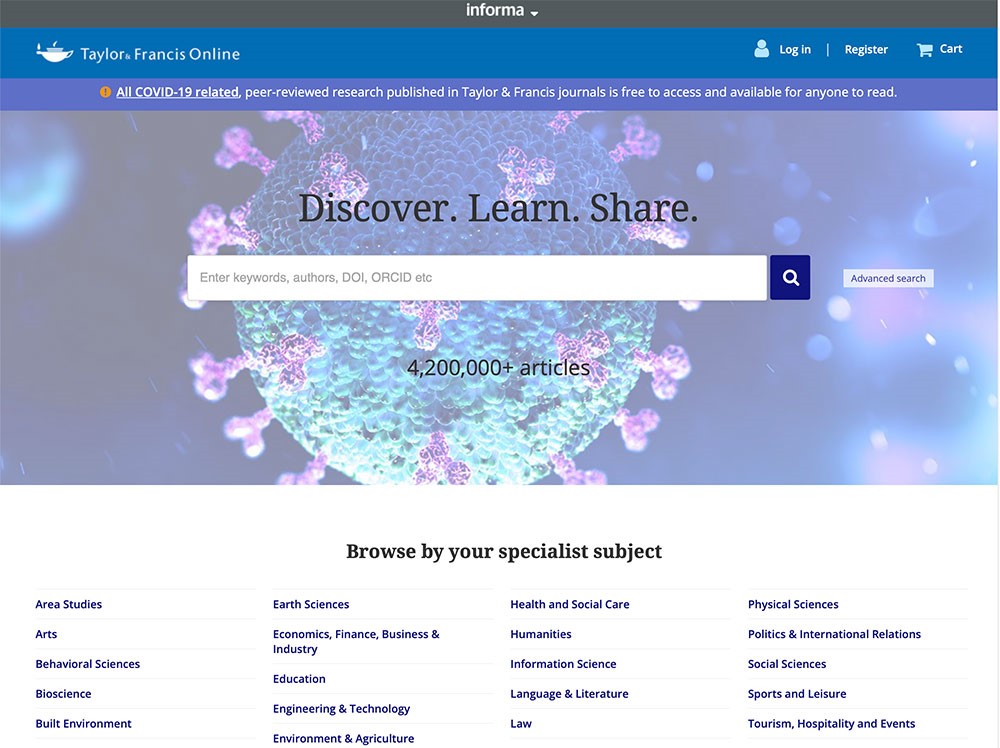

Taylor & Francis Online

Provides access to more than 2,700 high-quality, cross-disciplinary journals spanning Humanities and Social Sciences, Science and Technology, Engineering, Medicine and Healthcare.

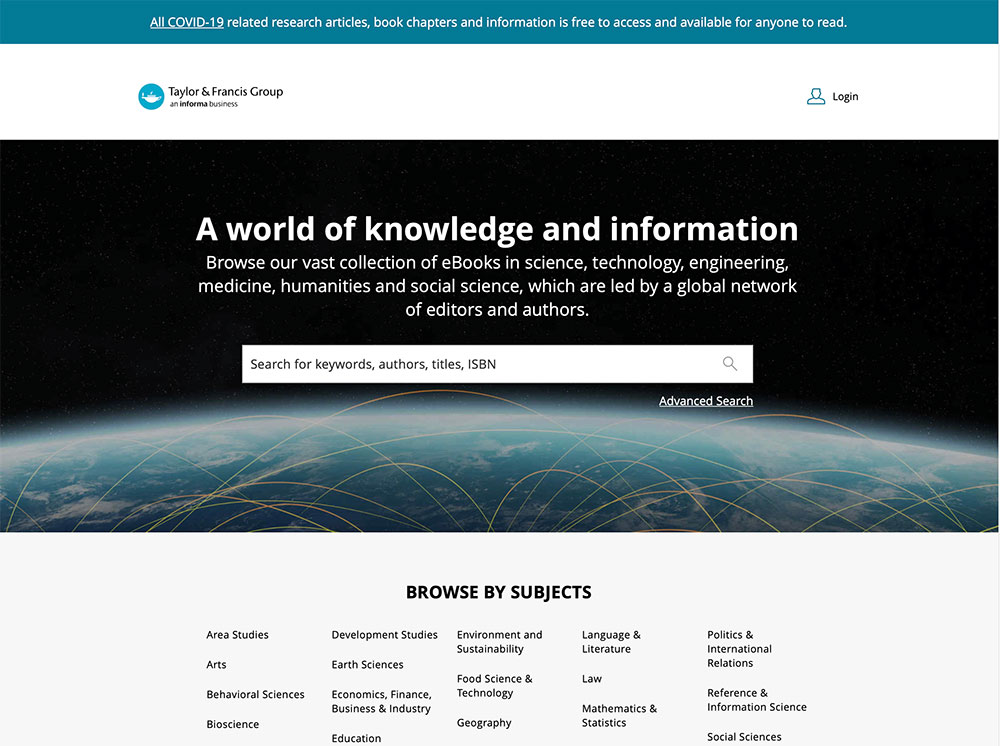

Taylor & Francis eBooks

One of the world’s largest collections of eBooks in science, technology, engineering, medicine, the humanities and the social sciences.

F1000

An open research publisher providing rapid, transparent publishing solutions to a diverse range of partner organizations, and directly to researchers via our publishing platforms, such as F1000Research.

Subject Areas & Disciplines

Engineering

- Automotive Engineering

- Biomedical Engineering

- Chemical Engineering

- Civil Engineering

- Electrical Engineering

- Energy & Oil

- Engineering, Computing & Technology

- Environmental Engineering

- General Engineering

- Industrial & Manufacturing Engineering

- Materials Science & Engineering

- Mechanical Engineering

- Mining Engineering

Medicine & Healthcare

- Addiction & Treatment

- Allied Health

- Anesthesiology

- Behavioral Health and Medicine

- Cardiology

- Clinical Medicine

- Dentistry

- Dermatology

- Endocrinology

- Expert Collection

- Hematology

- Hospitals and Health Systems

- Immunology

- Infectious Diseases

- Nephrology

- Neurology

- Nursing

- Oncology

- Pediatrics

- Pharmaceutical Sciences

- Psychiatry

- Public Health

- Radiology

- Substance Use & Misuse

- Surgery

- Urology

- Veterinary Medicine

- Women’s Health

Humanities & Social Sciences

- Area Studies

- Arts

- Behavioural Sciences

- Built Environment

- Business & Management

- Communication Studies

- Economics

- Education

- Finance

- Geography

- Global Development

- History

- Humanities and Social Sciences

- International Relations

- Language

- Law

- Literature

- Museum and Heritage Studies

- Philosophy

- Politics

- Psychology

- Religion

- Routledge Encyclopedia of Modernism

- Routledge Handbooks Online

- Sociology

- Tourism, Hospitality and Events

- Urban Studies

- World Who’s Who